Grid Search Optimization for Graph Neural Networks

In my last post, I was working on a Graph Convolutional Network to predict binding affinities between molecules and proteins. I have expanded on that code quite a bit since then. If you are only interested in the best model to date, you can find it here. While it can be improved with more epochs and more data (I only used a very small sample to save time), I was curious whether other GNN architectures would perform better. I had never written a grid search before and really wanted to try, so here is my implementation of it:

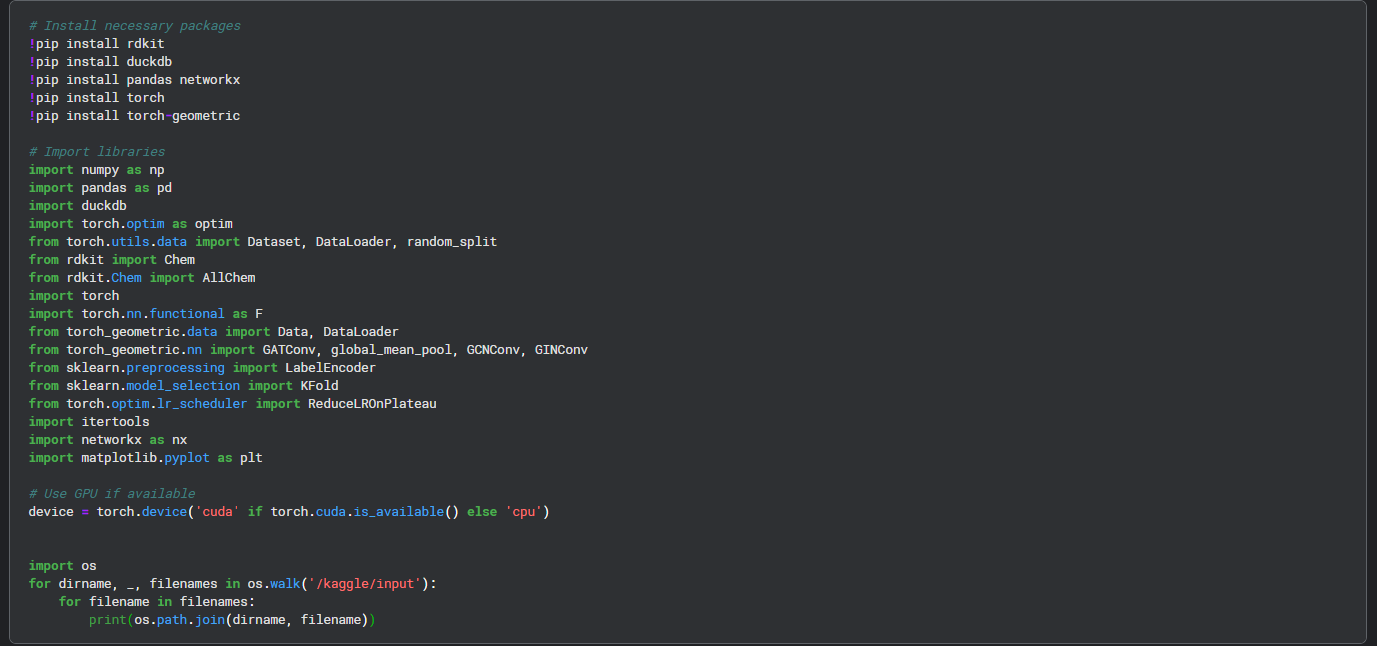

First, we have to install all the packages and libraries. Since I have to train a lot of models with different hyperparameters, I added GPU support to my notebook.

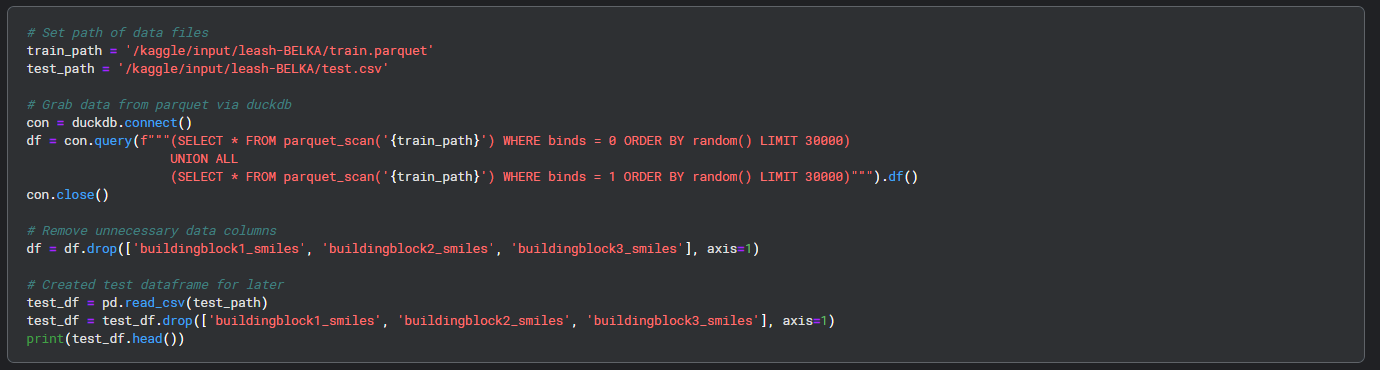

Then I extracted the data from the files and created dataframes for both the training and testing sets.

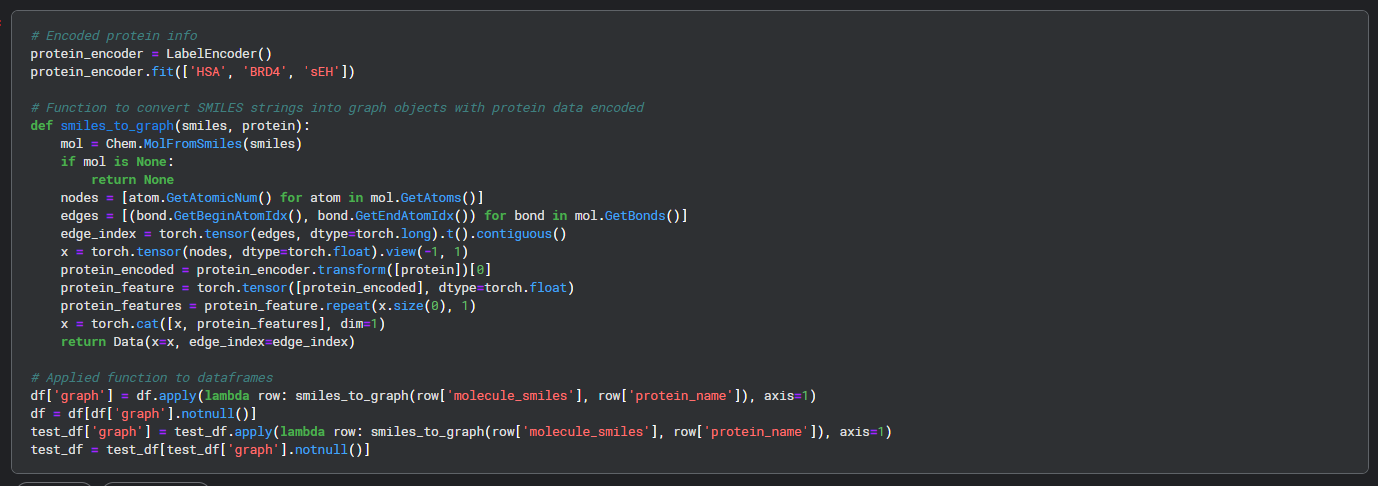

The next step is to encode the protein data and combine it with the SMILES strings, so that each molecular graph also carries the protein encoding.

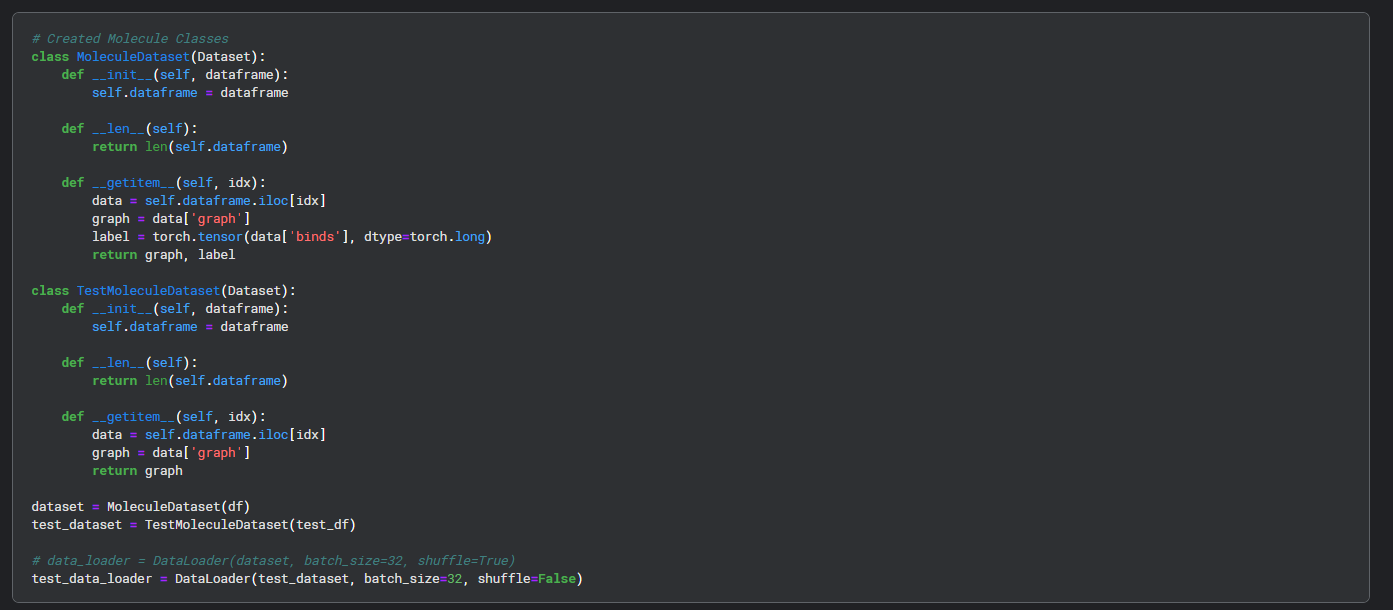
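As a rough illustration of the idea, here is a plain-Python sketch of one way to attach a protein encoding to a molecular graph: one-hot encode the protein and append that vector to every node's features. This is an assumption about the general approach, not the notebook code. The protein names, the atom features, and the helper names `one_hot` and `add_protein_to_nodes` are all hypothetical.

```python
def one_hot(label, vocabulary):
    """Return a one-hot list for `label` over an ordered vocabulary."""
    if label not in vocabulary:
        raise ValueError(f"unknown label: {label!r}")
    return [1.0 if label == item else 0.0 for item in vocabulary]


def add_protein_to_nodes(node_features, protein, proteins):
    """Append the protein's one-hot vector to every node feature row."""
    encoding = one_hot(protein, proteins)
    return [list(row) + encoding for row in node_features]


# Hypothetical data: two placeholder protein names and a 3-atom molecule
# with 2 made-up features per atom.
PROTEINS = ["protein_a", "protein_b"]
atoms = [[6.0, 4.0], [8.0, 2.0], [6.0, 3.0]]

graph_x = add_protein_to_nodes(atoms, "protein_b", PROTEINS)
print(graph_x[0])  # [6.0, 4.0, 0.0, 1.0]
```

Appending the encoding to every node is only one option. Another would be to concatenate it once to the pooled graph vector, which keeps the node features smaller.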

I created some classes to make working with the data easier.

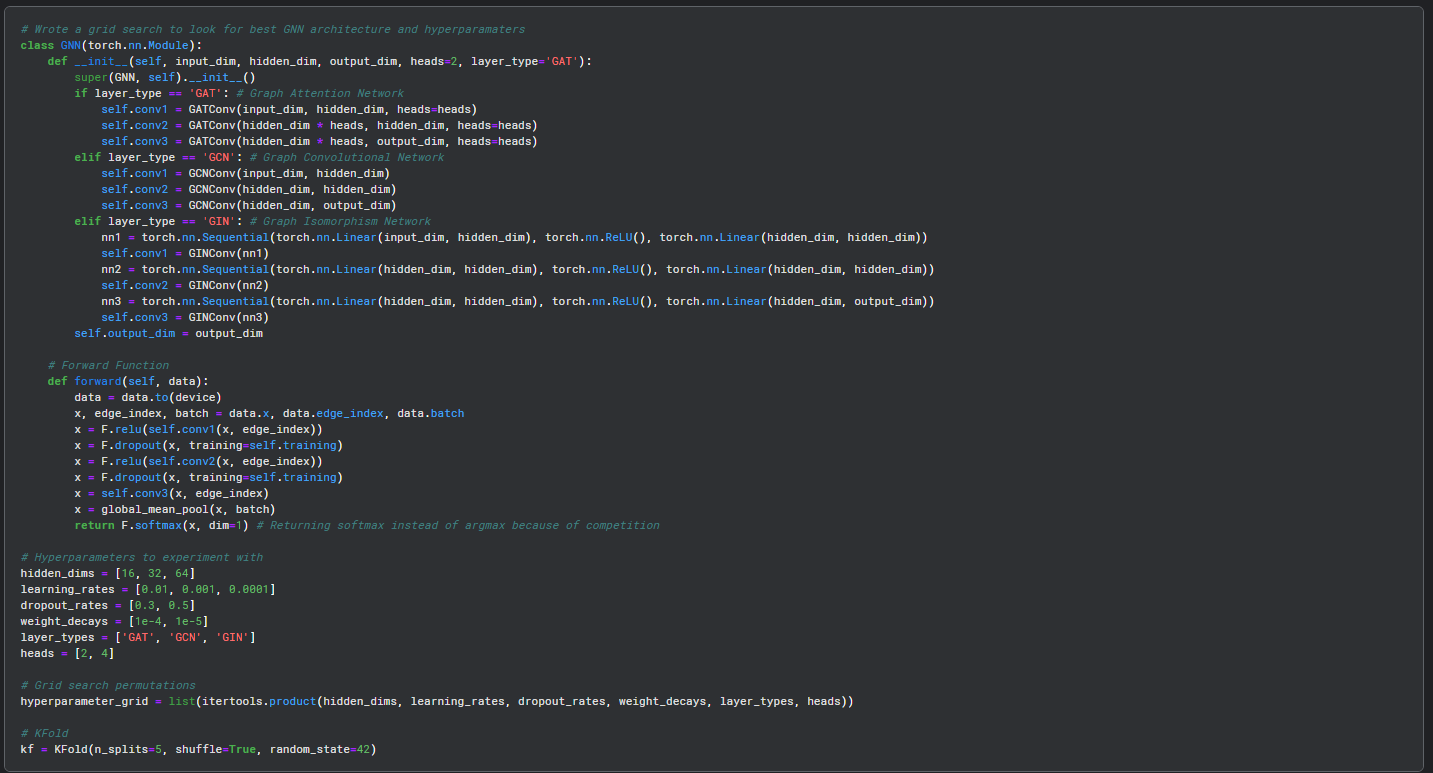

This is my implementation of grid search. It checks the requested layer type and builds a simple NN with new layers based on the parameters. In the forward function, I define how the input is processed during training.

I first grab ‘x’, the node feature matrix, along with ‘edge_index’, the tensor that defines the edges between nodes, and ‘batch’, which records which graph each node belongs to during batch processing. I pass the input through the first convolutional layer, apply a ReLU activation, and then apply dropout to the output. I repeat this for a second layer before applying a third layer: x = self.conv3(x, edge_index). I then perform global mean pooling to aggregate the node features of each graph into a single feature vector that is used for prediction. Finally, I apply a softmax activation function to produce a probability distribution.

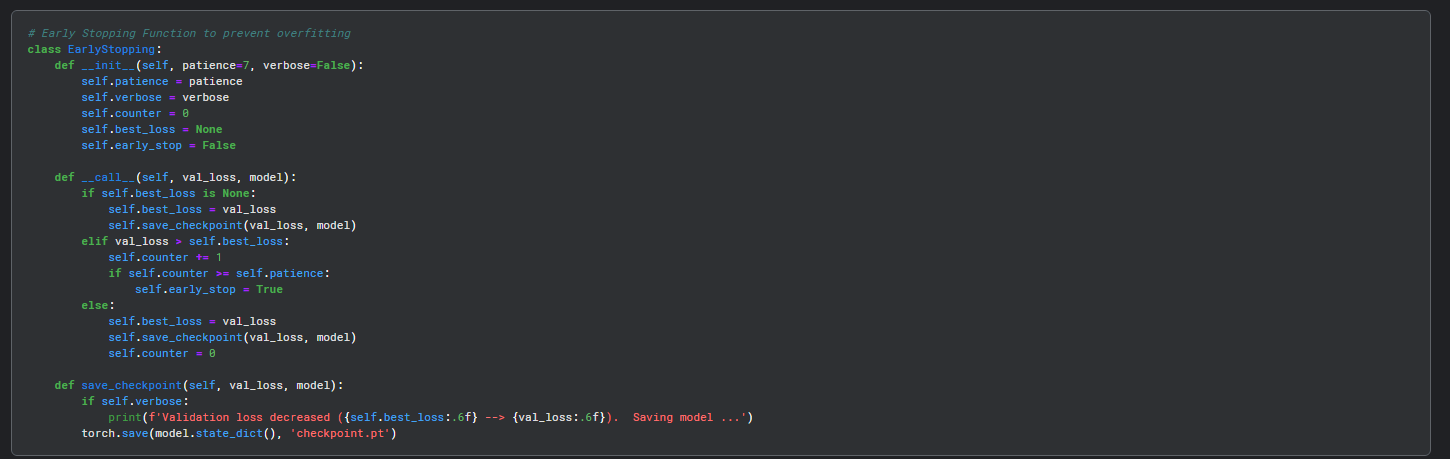
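To make the last two steps of the forward pass concrete, here is a framework-free sketch of global mean pooling followed by softmax. It mirrors what those operations compute but is not the notebook code: the function names and the toy inputs are my own, and it assumes every graph id in ‘batch’ has at least one node.

```python
import math


def global_mean_pool(x, batch):
    """Average node feature rows per graph; batch[i] is the graph id of node i."""
    num_graphs = max(batch) + 1
    dim = len(x[0])
    sums = [[0.0] * dim for _ in range(num_graphs)]
    counts = [0] * num_graphs
    for row, graph_id in zip(x, batch):
        counts[graph_id] += 1
        for j, value in enumerate(row):
            sums[graph_id][j] += value
    # Assumes each graph id appears at least once, so counts are non-zero.
    return [[s / counts[g] for s in sums[g]] for g in range(num_graphs)]


def softmax(logits):
    """Numerically stable softmax over one vector of scores."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Three nodes: the first two belong to graph 0, the last to graph 1.
x = [[1.0, 3.0], [3.0, 5.0], [2.0, 2.0]]
batch = [0, 0, 1]

pooled = global_mean_pool(x, batch)
print(pooled)  # [[2.0, 4.0], [2.0, 2.0]]
probs = [softmax(row) for row in pooled]  # one probability vector per graph
```

However many atoms a molecule has, pooling reduces it to one fixed-size vector, which is what lets molecules of different sizes share the same output layer.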

I save the best models and use early stopping to prevent overfitting from excessive training, especially since I only used a small portion of my dataset.

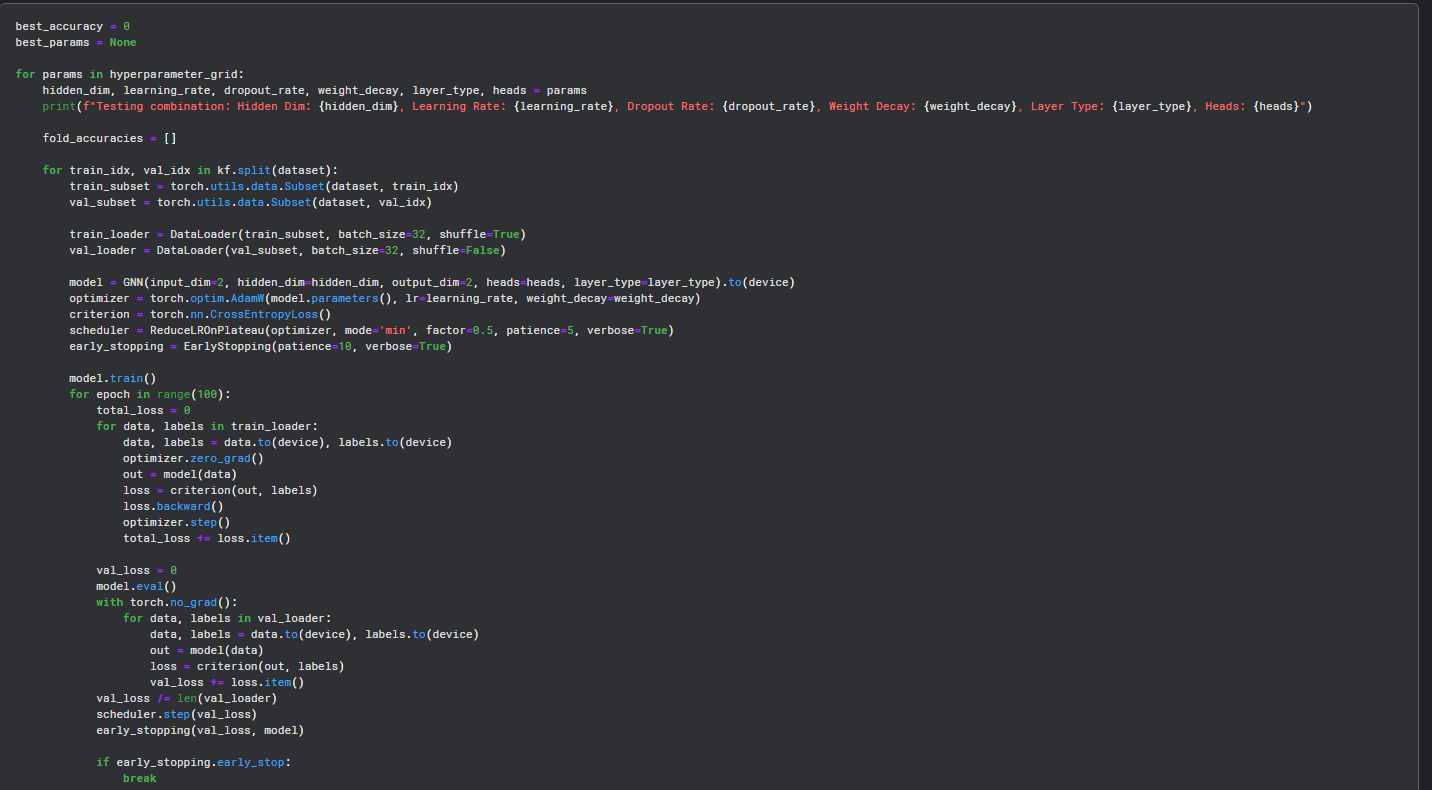

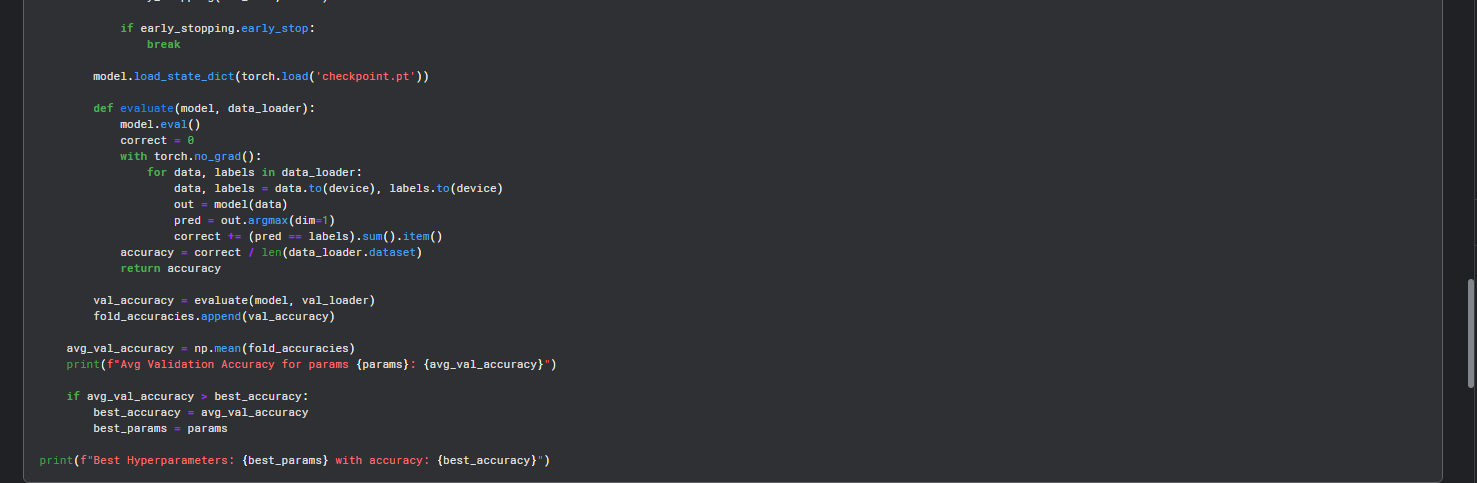
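The early-stopping logic can be sketched on its own, without any model attached. This is a minimal version, assuming validation loss is the monitored metric and that a "patience" counter decides when to stop. The class name, the parameter names, and the loss values are illustrative, not taken from the notebook.

```python
class EarlyStopping:
    """Stop when validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_epoch = None
        self.bad_epochs = 0

    def step(self, epoch, val_loss):
        """Record one epoch; return True when training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.bad_epochs = 0  # a real loop would checkpoint the model here
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Made-up validation losses: improvement stalls after epoch 2.
losses = [0.90, 0.70, 0.65, 0.66, 0.67, 0.68, 0.50]
stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, loss in enumerate(losses):
    if stopper.step(epoch, loss):
        stopped_at = epoch
        break

print(stopper.best_epoch, stopper.best_loss, stopped_at)  # 2 0.65 5
```

Note the trade-off in the toy data: the loop stops at epoch 5 and never sees the better loss at epoch 6. A larger patience catches late improvements at the cost of longer runs, which matters when many models are being trained.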

Time to actually train the model! With this code, we will train many models with different parameters.

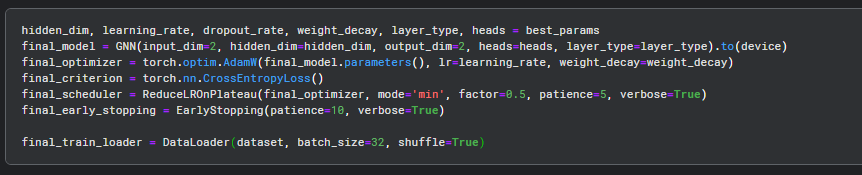

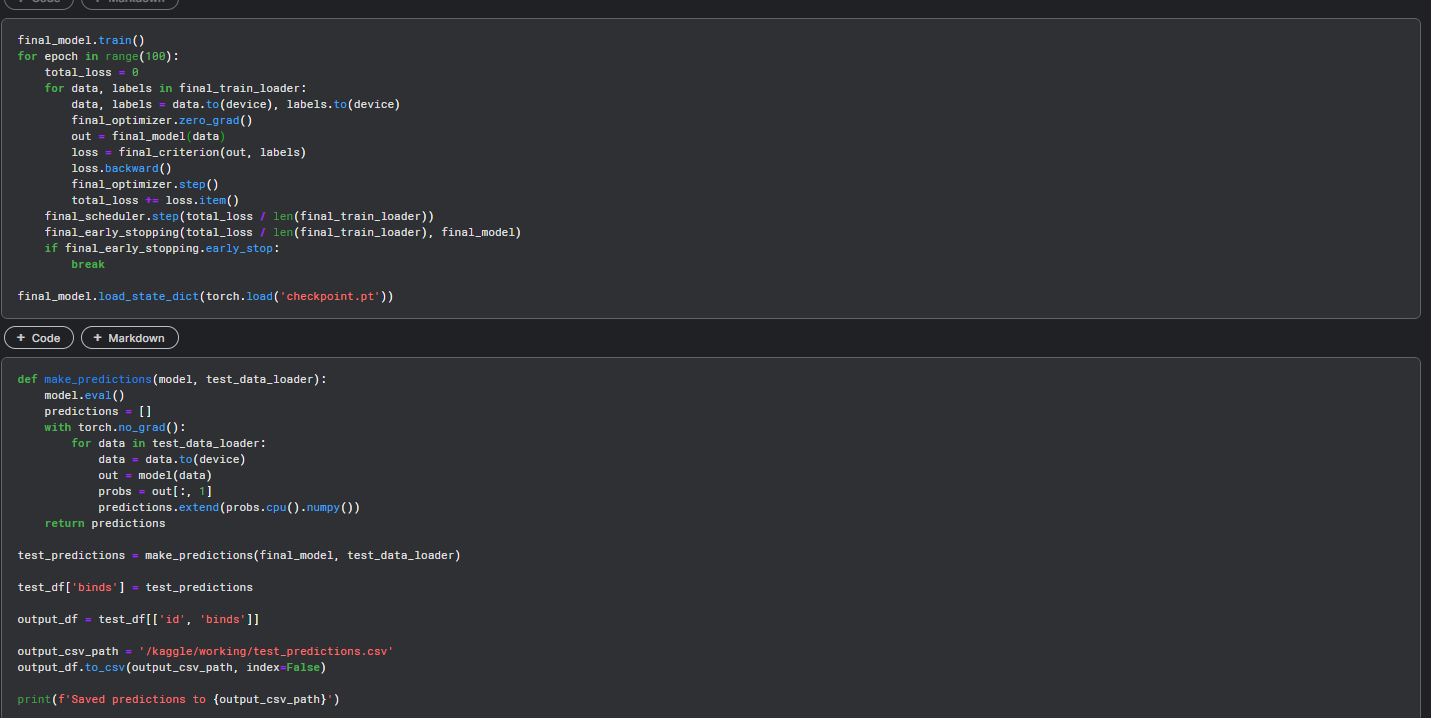
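Stripped of the training details, the search loop is just "try every combination and remember the best one". Here is a small sketch of that skeleton, assuming a lower score is better. The search space (including the layer names "GAT" and "SAGE"), the helper names, and especially `fake_validation_loss` are placeholders: the scoring function returns arbitrary numbers so the example runs quickly and says nothing about which architecture is actually best.

```python
from itertools import product

# Hypothetical search space; the layer names and values are placeholders.
PARAM_GRID = {
    "layer_type": ["GCN", "GAT", "SAGE"],
    "hidden_dim": [32, 64],
    "dropout": [0.2, 0.5],
}


def iter_configs(grid):
    """Yield one dict per combination of hyperparameter values."""
    keys = list(grid)
    for values in product(*(grid[k] for k in keys)):
        yield dict(zip(keys, values))


def grid_search(grid, train_and_evaluate):
    """Return (best_config, best_score), where a lower score is better."""
    best_config, best_score = None, float("inf")
    for config in iter_configs(grid):
        score = train_and_evaluate(config)
        if score < best_score:
            best_config, best_score = config, score
    return best_config, best_score


def fake_validation_loss(config):
    """Stand-in for a real training run; the numbers are arbitrary."""
    penalty = {"GCN": 0.3, "GAT": 0.1, "SAGE": 0.2}[config["layer_type"]]
    return penalty + abs(config["dropout"] - 0.2) + 1.0 / config["hidden_dim"]


configs = list(iter_configs(PARAM_GRID))
best_config, best_score = grid_search(PARAM_GRID, fake_validation_loss)
print(len(configs))  # 12
print(best_config)   # {'layer_type': 'GAT', 'hidden_dim': 64, 'dropout': 0.2}
```

In a real run, `train_and_evaluate` would build the model for that configuration, train it with early stopping, and return its best validation loss. The number of runs is the product of the option counts, so every extra hyperparameter multiplies the total training time.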

Once training is complete, the best parameters are saved, and we can retrain that model with more data and more epochs!

Here is what that code might look like.
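A minimal sketch of that follow-up step, assuming the winning hyperparameters are stored as a JSON file and then combined with a larger training budget. The file name, the helper names, the `epochs` and `sample_fraction` settings, and the parameter values are all hypothetical placeholders, not results from my runs.

```python
import json
import os
import tempfile

# Hypothetical output of the search; placeholder values, not real results.
best_params = {"layer_type": "GCN", "hidden_dim": 64, "dropout": 0.2}


def save_params(params, path):
    """Write the chosen hyperparameters to disk as JSON."""
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(params, handle, indent=2)


def load_params(path):
    """Read hyperparameters back from a JSON file."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


def final_run_config(params, epochs, sample_fraction):
    """Combine the tuned hyperparameters with a larger training budget."""
    if not 0.0 < sample_fraction <= 1.0:
        raise ValueError("sample_fraction must be in (0, 1]")
    return {**params, "epochs": epochs, "sample_fraction": sample_fraction}


# Write to a temporary directory so the example leaves no files behind in the project.
path = os.path.join(tempfile.mkdtemp(), "best_params.json")
save_params(best_params, path)
config = final_run_config(load_params(path), epochs=100, sample_fraction=1.0)
print(config)
```

Keeping the tuned parameters in a file separates the expensive search from the final training run, so the long run can be restarted without repeating the search.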

Overall, this project has taught me a lot of useful things. I created a complex grid search to test NN architectures and automate hyperparameter tuning. However, I think utilizing ECFPs and non-graph models may yield better results. I also recognize the value of building blocks data and will utilize that in my next model. To be continued!